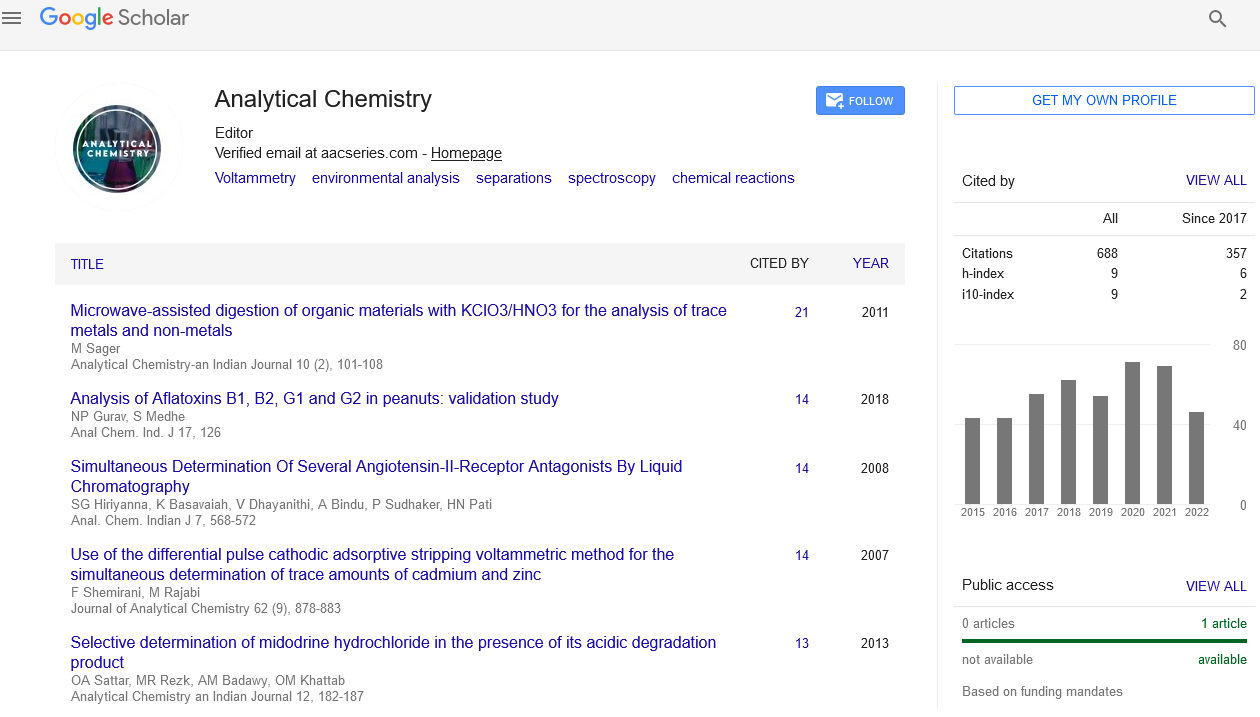

Editorial

Volume: 25(2)

Understanding the Limit of Detection (LOD) in Analytical Chemistry

Abstract

The limit of detection (LOD) is a fundamental parameter in analytical chemistry that defines the lowest concentration of an analyte that can be reliably distinguished from background noise. LOD plays a critical role in evaluating the sensitivity and suitability of analytical methods across chemical, pharmaceutical, environmental, and clinical applications. This article provides a comprehensive overview of the concept of LOD, its importance, factors influencing its determination, and common approaches used for its estimation. Understanding LOD is essential for selecting appropriate analytical techniques, optimizing method performance, ensuring regulatory compliance, and making informed scientific decisions.

Keywords: Limit of Detection, Sensitivity, Analytical Chemistry, Signal-to-Noise Ratio, Calibration Curve, Method Validation, Low-Level Quantification

Citation: Adrian Mitchell. Advances and Applications of Chromatography in Modern Analytical Chemistry. Anal Chem Ind J. 3(3):132. © 2021 Trade Science Inc. www.tsijournals.com | December-2021

The limit of detection (LOD) is a key performance characteristic of analytical methods, representing the smallest amount or concentration of an analyte that can be detected but not necessarily quantified with acceptable accuracy. In analytical chemistry, the ability to detect trace levels of substances is crucial for applications ranging from environmental monitoring of pollutants to detecting impurities in pharmaceuticals and biomarkers in clinical samples. LOD is influenced by several factors, including instrumental sensitivity, baseline noise, sample preparation procedures, and the physicochemical properties of the analyte. Accurately determining the LOD ensures that analytical results are meaningful, especially when the presence of an analyte at very low levels carries significant scientific, medical, or regulatory implications.

Common approaches to determining LOD include statistical methods based on the standard deviation of blank measurements, calibration curve analysis, and the widely used signal-to-noise (S/N) ratio technique. For example, an S/N ratio of 3:1 is often considered the minimum requirement for detection. The calibration curve method typically relies on the standard deviation of the response and the slope of the curve to compute the LOD, reflecting both noise and method sensitivity. Advances in analytical instrumentation, such as high-resolution mass spectrometry, spectrophotometry, chromatography, and electrochemical sensors, have contributed to dramatically lower detection limits, enabling detection at micro-, nano-, and even picogram levels. Despite these technological advancements, proper sample handling, method optimization, and rigorous validation remain essential to achieving a reliable and reproducible LOD.
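
The three estimation approaches mentioned above can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: the function names are hypothetical, the multiplier of 3.3 follows the convention commonly used in ICH Q2-style validation (the text itself does not fix a value), and the standard deviation of the response is taken here as the residual standard deviation of a straight-line fit, which is one of several accepted choices.

```python
import statistics


def linear_fit(conc, response):
    """Ordinary least-squares fit: response = intercept + slope * conc."""
    n = len(conc)
    mean_x = sum(conc) / n
    mean_y = sum(response) / n
    sxx = sum((x - mean_x) ** 2 for x in conc)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(conc, response))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def lod_from_calibration(conc, response, k=3.3):
    """Calibration-curve estimate: LOD = k * sigma / slope.

    sigma is the residual standard deviation of the fit (assumption);
    k = 3.3 is an assumed, conventional multiplier.
    """
    slope, intercept = linear_fit(conc, response)
    residuals = [y - (intercept + slope * x) for x, y in zip(conc, response)]
    # n - 2 degrees of freedom because slope and intercept were both fitted
    sigma = (sum(r ** 2 for r in residuals) / (len(conc) - 2)) ** 0.5
    return k * sigma / slope


def lod_from_blanks(blank_responses, slope, k=3.3):
    """Blank-based estimate: sigma is the sample standard deviation of blanks."""
    sigma = statistics.stdev(blank_responses)
    return k * sigma / slope


def is_detected(signal, noise, threshold=3.0):
    """Signal-to-noise criterion: detected when S/N meets the 3:1 threshold."""
    return signal / noise >= threshold


if __name__ == "__main__":
    # Hypothetical calibration data (concentration, instrument response)
    conc = [1.0, 2.0, 3.0, 4.0]
    resp = [2.1, 3.9, 6.1, 7.9]
    print("LOD (calibration curve):", lod_from_calibration(conc, resp))
    print("LOD (blanks):", lod_from_blanks([0.9, 1.0, 1.1], slope=2.0))
    print("Peak detected at S/N 3:1?", is_detected(signal=3.0, noise=1.0))
```

The returned LOD is in the same concentration units as the calibration standards. In practice the choice of sigma (blank replicates, residuals, or the standard deviation of the intercept) should follow whichever validation guideline applies to the method.
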

Understanding the limitations associated with LOD is equally important, as incorrect estimation can lead to misinterpretation of data, false positives, or failure to detect substances that may pose health or environmental risks. As analytical demands increase with stricter regulations and the need for trace-level analysis, the concept of LOD continues to be central to method development, quality control, and scientific research.

Conclusion

The limit of detection is a vital measure of an analytical method’s sensitivity and plays an essential role in diverse areas of chemical, pharmaceutical, environmental, and clinical analysis. Accurate determination of the LOD ensures that analytical results are trustworthy, especially when detecting trace-level substances. By understanding the principles, influencing factors, and calculation methods of LOD, analysts can develop and validate robust methods capable of meeting the rigorous demands of modern scientific and regulatory environments. As technology continues to evolve, the continuous improvement of LOD determination will further enhance the precision and reliability of analytical measurements.